Internal API Documentation with Docusaurus

Introduction

In our previous blog posts, we extended Docusaurus with API documentation powered by Redoc and enhanced the design with Tailwind CSS and shadcn/ui. Now, we want to ensure that the documentation itself is only accessible to the right audience.

Internal APIs, early-stage specifications, or partner integrations often contain information that should not be visible to everyone. By default, Docusaurus behaves like any other static site: it simply serves files to anyone who can reach the URL. That means routes like /api or openapi.yaml are public by design.

To solve this, we’ll add an authentication layer in front of Docusaurus. Our tool of choice is OAuth2 Proxy, a lightweight middleware that integrates seamlessly with popular identity providers (IdPs) such as Google, GitHub, or Keycloak. In this article we’ll use Keycloak as the example IdP, but the general approach remains the same for other providers as well.

The focus here is on the developer setup: running Docusaurus inside Docker, proxied through Nginx, and protected via OAuth2 Proxy. At the end, we’ll also point out what needs to change when deploying the same setup to production.

Running Docusaurus behind OAuth2 Proxy

For development we want a full-featured Docusaurus dev server with hot reload and WebSocket updates, and authentication in front of it so not everyone can see our API docs.

We’ll use Docker Compose to orchestrate three services:

- docusaurus: runs the dev server

- oauth2-proxy: handles login with Keycloak and issues secure cookies

- nginx: acts as a reverse proxy, forwarding traffic either to Docusaurus or to oauth2-proxy, depending on the route

The Compose file looks like this:

```yaml
services:
  nginx:
    image: nginx:1.27-alpine
    volumes:
      - ./nginx.conf:/etc/nginx/nginx.conf:ro
    networks:
      - docusaurus
    ports:
      - "80:80"
    depends_on:
      - oauth2-proxy
      - docusaurus

  docusaurus:
    image: node:24
    working_dir: /opt/docusaurus
    volumes:
      - .:/opt/docusaurus
    # --poll 1000: poll for file changes every second (more reliable on bind mounts)
    command: sh -c "npm install && npm run start -- --host 0.0.0.0 --poll 1000"
    networks:
      - docusaurus
    # Direct access for debugging; note that this port bypasses Nginx and oauth2-proxy
    ports:
      - "3000:3000"

  oauth2-proxy:
    image: quay.io/oauth2-proxy/oauth2-proxy:v7.12.0
    # Resolve "localhost" to the Docker host so the issuer URL below points at Keycloak on the host
    extra_hosts:
      - 'localhost:host-gateway'
    environment:
      OAUTH2_PROXY_PROVIDER: "keycloak-oidc"
      OAUTH2_PROXY_CLIENT_ID: "docusaurus"
      OAUTH2_PROXY_CLIENT_SECRET: "<top-secret>"
      OAUTH2_PROXY_OIDC_ISSUER_URL: "http://localhost:8080/realms/myrealm"
      OAUTH2_PROXY_REDIRECT_URL: "http://localhost/oauth2/callback"
      OAUTH2_PROXY_COOKIE_DOMAIN: "localhost"
      OAUTH2_PROXY_COOKIE_NAME: "docusaurus_dev_auth"
      OAUTH2_PROXY_COOKIE_SAMESITE: "lax"
      OAUTH2_PROXY_COOKIE_SECRET: "<cookie-secret>"
      # Plain HTTP in dev; set to "true" behind HTTPS
      OAUTH2_PROXY_COOKIE_SECURE: "false"
      OAUTH2_PROXY_REVERSE_PROXY: "true"
      # Emit X-Auth-Request-* headers that Nginx reads via auth_request_set
      OAUTH2_PROXY_SET_XAUTHREQUEST: "true"
      OAUTH2_PROXY_EMAIL_DOMAINS: "*"
      # List options use a plural env var name (trailing S)
      OAUTH2_PROXY_WHITELIST_DOMAINS: "localhost"
    command: ["--http-address=0.0.0.0:4180"]
    networks:
      - docusaurus

networks:
  docusaurus:
    driver: bridge
```

How to generate a strong cookie secret is described in the OAuth2 Proxy documentation: https://oauth2-proxy.github.io/oauth2-proxy/configuration/overview#generating-a-cookie-secret
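You may prefer to stay in Node.js rather than use a shell one-liner. The following sketch produces a value of the same kind as the documented approach: 32 random bytes encoded as URL-safe base64. It assumes that oauth2-proxy accepts the unpadded base64url form, since it decodes the secret and expects 16, 24 or 32 bytes. `generateCookieSecret` is a hypothetical helper name, not part of oauth2-proxy.

```javascript
// Hypothetical helper: generate an oauth2-proxy cookie secret with Node.js.
// 32 random bytes -> AES-256-sized secret, encoded as URL-safe base64
// (Node's 'base64url' encoding omits the '=' padding).
const { randomBytes } = require('node:crypto');

function generateCookieSecret(byteLength = 32) {
  if (![16, 24, 32].includes(byteLength)) {
    throw new RangeError('oauth2-proxy expects a 16, 24 or 32 byte secret');
  }
  return randomBytes(byteLength).toString('base64url');
}

// Paste the printed value into OAUTH2_PROXY_COOKIE_SECRET.
console.log(generateCookieSecret());
```

Each run prints a fresh 43-character secret. Keep it out of version control, just like the client secret.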

Nginx Configuration

The Nginx config is the glue. It needs to:

- protect the `/redoc` and `/spec` routes with `auth_request`
- forward `/oauth2/*` to the proxy
- proxy all other traffic to the Docusaurus dev server
- support WebSockets for hot reload

Here’s the relevant part:

```nginx
events {}

http {
    # Conditional Connection header for WebSocket upgrades
    map $http_upgrade $connection_upgrade {
        default upgrade;
        ''      close;
    }

    server {
        listen 80;

        # Webpack/Vite/DevServer HMR WebSocket endpoints
        # Ensure these take precedence over regex locations below
        location ^~ /ws {
            proxy_pass http://docusaurus:3000;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection $connection_upgrade;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header X-Forwarded-Host $host;
            proxy_read_timeout 1h;
            proxy_send_timeout 1h;
            proxy_buffering off;
        }

        location ^~ /sockjs-node {
            proxy_pass http://docusaurus:3000;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection $connection_upgrade;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header X-Forwarded-Host $host;
            proxy_read_timeout 1h;
            proxy_send_timeout 1h;
            proxy_buffering off;
        }

        location / {
            proxy_pass http://docusaurus:3000;
            proxy_http_version 1.1;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header X-Forwarded-Host $host;
        }

        # Protect /redoc + /spec/openapi.yaml with oauth2-proxy
        location ~ ^/(redoc|spec) {
            auth_request /oauth2/auth;
            error_page 401 = @error401;
            error_page 403 = @error401;

            proxy_pass http://docusaurus:3000;

            auth_request_set $auth_user  $upstream_http_x_auth_request_user;
            auth_request_set $auth_email $upstream_http_x_auth_request_email;
            proxy_set_header X-User  $auth_user;
            proxy_set_header X-Email $auth_email;
        }

        # oauth2-proxy endpoints (login, callback, logout)
        location /oauth2/ {
            proxy_pass http://oauth2-proxy:4180;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Auth-Request-Redirect $request_uri;
            proxy_set_header Cookie $http_cookie;
        }

        # oauth2-proxy → internal auth check
        location = /oauth2/auth {
            internal;
            proxy_pass http://oauth2-proxy:4180;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-Uri $request_uri;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header Cookie $http_cookie;
            # nginx auth_request includes headers but not body
            proxy_set_header Content-Length "";
            proxy_pass_request_body off;
        }

        # Handle unauthenticated users (preserve original URL)
        location @error401 {
            return 302 /oauth2/start?rd=$request_uri;
        }
    }
}
```
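The order of the location blocks above does not decide which block handles a request; nginx's matching rules do. The sketch below is a simplified model of those rules, applied to this config:

- an exact `=` match wins immediately;
- otherwise nginx takes the longest matching prefix, and a `^~` prefix stops the search there;
- otherwise the first regex location in file order wins;
- otherwise the longest prefix is used.

It is a hypothetical teaching aid, not nginx's implementation. It ignores nested locations, case-insensitive `~*` regexes and URI normalization.

```javascript
// Simplified model of nginx location selection for the nginx.conf above.
// Not nginx's real implementation; handler labels are illustrative only.
const locations = [
  { type: '^~', path: '/ws', handler: 'docusaurus (WebSocket)' },
  { type: '^~', path: '/sockjs-node', handler: 'docusaurus (WebSocket)' },
  { type: 'prefix', path: '/', handler: 'docusaurus' },
  { type: '~', regex: /^\/(redoc|spec)/, handler: 'docusaurus behind auth_request' },
  { type: 'prefix', path: '/oauth2/', handler: 'oauth2-proxy' },
  { type: '=', path: '/oauth2/auth', handler: 'oauth2-proxy auth check (internal)' },
];

function selectLocation(uri) {
  // 1. An exact match ("location = ...") wins immediately.
  const exact = locations.find((l) => l.type === '=' && l.path === uri);
  if (exact) return exact;

  // 2. Remember the longest matching prefix location (plain or ^~).
  let longest = null;
  for (const l of locations) {
    const isPrefix = l.type === 'prefix' || l.type === '^~';
    if (isPrefix && uri.startsWith(l.path) && (!longest || l.path.length > longest.path.length)) {
      longest = l;
    }
  }

  // 3. If that prefix is marked ^~, regex locations are not checked.
  if (longest && longest.type === '^~') return longest;

  // 4. Otherwise the first matching regex (in file order) wins...
  const regex = locations.find((l) => l.type === '~' && l.regex.test(uri));
  if (regex) return regex;

  // 5. ...and without a regex match the longest prefix is used.
  return longest;
}

for (const uri of ['/', '/docs/intro', '/redoc', '/spec/openapi.yaml', '/ws', '/oauth2/start', '/oauth2/auth']) {
  console.log(uri.padEnd(20), '->', selectLocation(uri).handler);
}
```

The model also makes a detail of the regex visible. `^/(redoc|spec)` is not anchored at a path-segment boundary, so a page such as `/specials` would also require login. If that matters for your site, a tighter pattern such as `^/(redoc|spec)(/|$)` avoids it.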

Keycloak

Before starting the services with docker compose up, we need an identity provider. In this example we’ll use Keycloak, since it is a well-supported open source solution and integrates seamlessly with OAuth2 Proxy.

Running Keycloak locally in Docker is straightforward:

```shell
docker run \
  -p 127.0.0.1:8080:8080 \
  -e KC_BOOTSTRAP_ADMIN_USERNAME=admin \
  -e KC_BOOTSTRAP_ADMIN_PASSWORD=admin \
  -v ./keycloak/:/opt/keycloak/data/ \
  quay.io/keycloak/keycloak:26.3.4 start-dev
```

This command starts Keycloak in development mode with a predefined admin user. You can now log into the admin console at http://localhost:8080 using admin/admin.

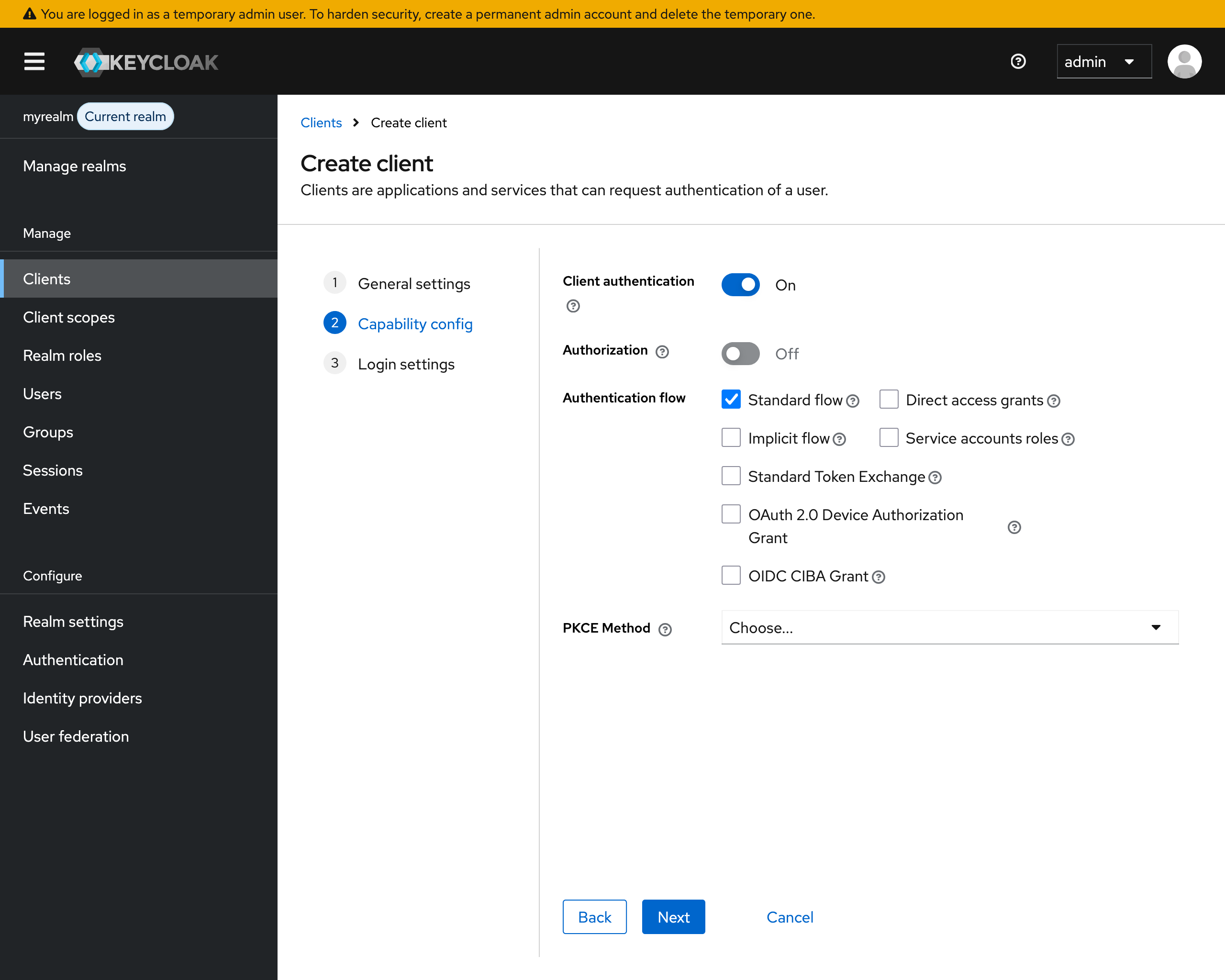

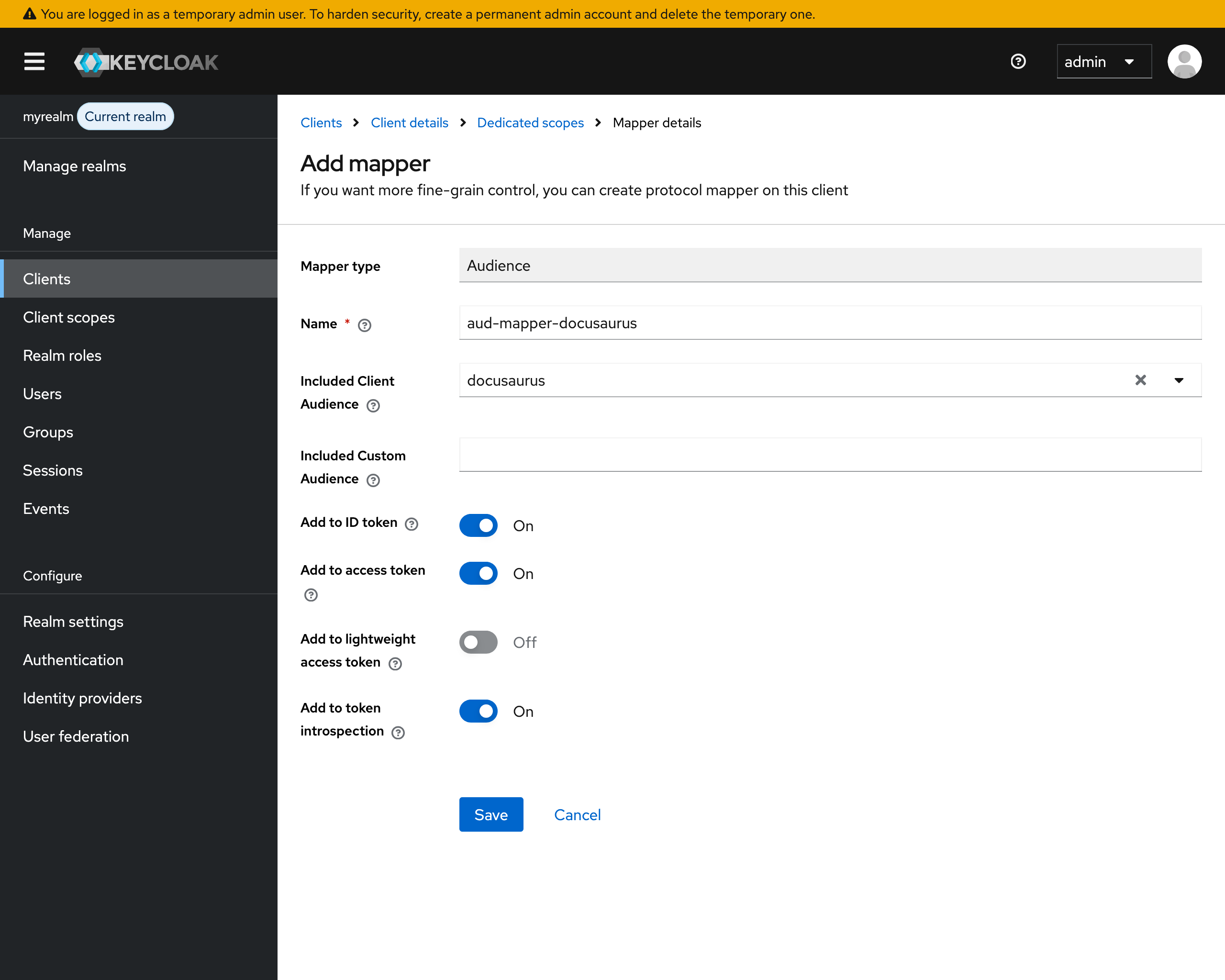

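Inside the console, create a realm myrealm and add a client called docusaurus. Enable Client authentication so that Keycloak issues a client secret, and make sure the client has the correct redirect URI configured under Valid redirect URIs: http://localhost/oauth2/callback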

The oauth2-proxy documentation site describes the Keycloak OIDC client configuration in detail, including a minimal example. Copy the Client ID and the Client Secret (found on the client's Credentials tab) from the Keycloak admin console, and update the corresponding environment variables of the oauth2-proxy service in your docker-compose.yml.
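Before running docker compose up, it can help to confirm that the configured OAUTH2_PROXY_OIDC_ISSUER_URL matches the issuer Keycloak advertises. oauth2-proxy performs OIDC discovery against `<issuer>/.well-known/openid-configuration`. It typically refuses to start when the `issuer` in that document differs from the configured value, even by a trailing slash. The sketch below compares the two. The function names are hypothetical, and it assumes Node.js 18+ for the built-in `fetch`.

```javascript
// Sketch: compare the issuer Keycloak advertises with the one oauth2-proxy
// is configured with. Requires Node.js 18+ for the global fetch().
function discoveryUrl(issuer) {
  // OIDC discovery lives under <issuer>/.well-known/openid-configuration
  return `${issuer.replace(/\/+$/, '')}/.well-known/openid-configuration`;
}

function issuerMatches(discoveryDoc, configuredIssuer) {
  // Strict string comparison: ".../realms/myrealm" and ".../realms/myrealm/" differ.
  return discoveryDoc.issuer === configuredIssuer;
}

async function checkIssuer(configuredIssuer) {
  const res = await fetch(discoveryUrl(configuredIssuer));
  if (!res.ok) {
    throw new Error(`Discovery request failed with HTTP ${res.status}`);
  }
  const doc = await res.json();
  return { advertised: doc.issuer, matches: issuerMatches(doc, configuredIssuer) };
}
```

Run it on the host, where localhost:8080 is Keycloak itself, for example with `checkIssuer('http://localhost:8080/realms/myrealm').then(console.log, console.error)`.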

If you encounter a 500 error during login, check both the Keycloak server logs and the oauth2-proxy logs. A very common issue is a mismatch between the redirect URI configured in Keycloak and the one used by oauth2-proxy. Another pitfall is missing user attributes: oauth2-proxy expects a verified email address in the ID token. If the email field is empty or unverified, login will fail.
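To check the email pitfall, decode an ID token for the affected user and look at its `email` and `email_verified` claims. In Keycloak, `email_verified` reflects the user's Email verified toggle, and the admin console can generate a sample ID token under the client's Client scopes → Evaluate tab. The helper below is a hypothetical debugging sketch. It only base64url-decodes the payload and does not verify the signature.

```javascript
// Debugging sketch: decode a JWT payload (NO signature verification) and
// report whether it carries the claims oauth2-proxy needs for email login.
function inspectIdToken(jwt) {
  const parts = jwt.split('.');
  if (parts.length !== 3) {
    throw new Error('Not a JWT: expected three dot-separated parts');
  }
  // The payload is base64url-encoded JSON.
  const claims = JSON.parse(Buffer.from(parts[1], 'base64url').toString('utf8'));

  const problems = [];
  if (!claims.email) problems.push('email claim is missing or empty');
  if (claims.email_verified !== true) problems.push('email_verified is not true');
  return { claims, problems };
}
```

An empty `problems` array means the token looks fine in this respect. In that case the redirect URI is the more likely culprit.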

Adding Login/Logout Links to the Footer

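To keep authentication visible to the user, we want to extend the Docusaurus footer. The footer should show either a login link or the currently logged-in user with a logout option. We wrap the original footer component via swizzling (for example in src/theme/Footer/index.js) and fetch the user info from oauth2-proxy's built-in /oauth2/userinfo endpoint. That endpoint returns the current user as JSON, or a 401 if there is no session.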

```jsx
// src/theme/Footer/index.js: wraps the original Docusaurus footer
import React, { useEffect, useState } from 'react';
import Footer from '@theme-original/Footer';

export default function FooterWrapper(props) {
  const [user, setUser] = useState(null);

  useEffect(() => {
    // oauth2-proxy answers 401 without a session; treat that as "logged out"
    fetch('/oauth2/userinfo', { credentials: 'include' })
      .then((res) => (res.ok ? res.json() : null))
      .then((data) => setUser(data))
      .catch(() => {});
  }, []);

  return (
    <>
      <Footer {...props} />
      <div style={{ textAlign: 'center', padding: '1rem' }}>
        {!user ? (
          <a href="/oauth2/start">Login</a>
        ) : (
          <>
            Logged in as {user.email || user.user} |{' '}
            {/* oauth2-proxy's logout endpoint is /oauth2/sign_out */}
            <a href="/oauth2/sign_out">Logout</a>
          </>
        )}
      </div>
    </>
  );
}
```
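As written, the footer's login link sends users back to / after signing in. Logging out only clears oauth2-proxy's session cookie; the Keycloak session stays active, so the next login may not prompt for a password. oauth2-proxy's /oauth2/start and /oauth2/sign_out endpoints accept an `rd` query parameter, which lets users land on the page they came from. The helpers below are hypothetical. They URL-encode the current path so that query strings survive the round trip, and they keep the redirect relative so no extra domain whitelisting is needed.

```javascript
// Hypothetical helpers for the swizzled footer: build oauth2-proxy URLs that
// return the user to the current page via the rd parameter.
function loginHref(currentPath) {
  return `/oauth2/start?rd=${encodeURIComponent(currentPath)}`;
}

function logoutHref(currentPath) {
  return `/oauth2/sign_out?rd=${encodeURIComponent(currentPath)}`;
}

// /oauth2/userinfo returns JSON such as { "user": "...", "email": "..." }.
function displayName(userinfo) {
  if (!userinfo) return null;
  return userinfo.email || userinfo.user || null;
}
```

Inside the component you could obtain the path with `useLocation()` from `@docusaurus/router`, for example `<a href={loginHref(location.pathname + location.search)}>Login</a>`.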

Debugging and Common Pitfalls

During setup you may run into a few recurring issues:

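- 403 vs 401: If you see `403 Forbidden` instead of being redirected to the login page, check your Nginx `auth_request` setup. In particular, make sure both `error_page 401` and `error_page 403` point to the `@error401` location.

- Empty headers: Make sure `OAUTH2_PROXY_SET_XAUTHREQUEST=true` is set. Because `/oauth2/auth` is marked `internal`, Nginx answers direct requests to it with 404. To inspect its response, temporarily remove the `internal;` line, run `curl -i -H "Cookie: <session cookie(s) from your browser>" http://localhost/oauth2/auth`, and check for `X-Auth-Request-*` headers.

- Hot reload not working: The WebSocket upgrade headers in the `/ws` (and `/sockjs-node`) location blocks are essential. Without them, hot reload in the Docusaurus dev server silently breaks.

- Cookies in production: Always set a unique cookie name (`OAUTH2_PROXY_COOKIE_NAME`) for production; otherwise dev and prod sessions may conflict.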
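The 401/403 behaviour follows from how nginx's `auth_request` module interprets the subrequest:

- a 2xx status allows the request;
- a 401 or 403 denies it with that status;
- any other status is treated as an error (500).

oauth2-proxy's /oauth2/auth endpoint answers 202 Accepted for a valid session and 401 otherwise. The sketch below models that decision for the protected location, including the `error_page` redirect. `protectedRouteOutcome` is a hypothetical name, and the model ignores everything else nginx does.

```javascript
// Simplified model (not nginx code) of how a request to the protected
// location ~ ^/(redoc|spec) is resolved from the /oauth2/auth subrequest status.
function protectedRouteOutcome(subrequestStatus, requestUri) {
  if (subrequestStatus >= 200 && subrequestStatus < 300) {
    // Valid session (oauth2-proxy answers 202): forward to the dev server.
    return { action: 'proxy', upstream: 'http://docusaurus:3000' };
  }
  if (subrequestStatus === 401 || subrequestStatus === 403) {
    // error_page 401/403 = @error401 turns the denial into a login redirect.
    // Like the nginx config, requestUri is not URL-encoded here.
    return { action: 'redirect', status: 302, location: `/oauth2/start?rd=${requestUri}` };
  }
  // Any other subrequest status (e.g. 502 when oauth2-proxy is down) becomes a 500.
  return { action: 'error', status: 500 };
}
```

Without the `error_page 403` line, the second branch would return a bare 403 for that status instead of redirecting. That is one way to end up with the "403 instead of login" symptom.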

Notes on Production

For production the general setup remains the same, with a few differences:

- Instead of running the Docusaurus dev server, build the site (npm run build) and serve the static files directly from Nginx.
- Use a different cookie name than in dev, ideally prefixed with your project or environment.
- Consider running oauth2-proxy with HTTPS in front (or terminate TLS at a load balancer), and set OAUTH2_PROXY_COOKIE_SECURE to "true" so the session cookie is only sent over HTTPS.
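The differences between the dev and production settings are easy to forget. A small pre-deployment check like the one below can flag them. This is a hypothetical sketch, not a feature of oauth2-proxy. It assumes the environment variable names from the Compose file above and the dev cookie name docusaurus_dev_auth.

```javascript
// Hypothetical pre-deployment check (not part of oauth2-proxy): flags
// settings that are fine for the local dev setup but not for production.
function checkProductionEnv(env, devCookieName = 'docusaurus_dev_auth') {
  const problems = [];

  // Behind HTTPS the session cookie should carry the Secure flag.
  if (env.OAUTH2_PROXY_COOKIE_SECURE !== 'true') {
    problems.push('OAUTH2_PROXY_COOKIE_SECURE should be "true"');
  }

  // A distinct cookie name keeps dev and prod sessions apart.
  const name = env.OAUTH2_PROXY_COOKIE_NAME;
  if (!name || name === devCookieName) {
    problems.push('OAUTH2_PROXY_COOKIE_NAME should be set and differ from the dev cookie name');
  }

  // The secret must be 16, 24 or 32 bytes, either raw or base64-encoded.
  const secret = env.OAUTH2_PROXY_COOKIE_SECRET || '';
  const decodedLength = Buffer.from(secret, 'base64url').length;
  if (![16, 24, 32].includes(secret.length) && ![16, 24, 32].includes(decodedLength)) {
    problems.push('OAUTH2_PROXY_COOKIE_SECRET should be 16, 24 or 32 bytes');
  }

  // The callback URL registered in Keycloak should use HTTPS in production.
  if (!(env.OAUTH2_PROXY_REDIRECT_URL || '').startsWith('https://')) {
    problems.push('OAUTH2_PROXY_REDIRECT_URL should use https://');
  }

  return problems;
}

console.log(checkProductionEnv(process.env));
```

Running it against the dev values from the Compose file reports all four issues. That is expected, since those settings are deliberately relaxed for local development.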

We’ll cover a production-ready deployment including container images and CI/CD integration in a dedicated article.

Conclusion

With this setup, your Docusaurus site remains just as convenient to work with in development, while already enforcing authentication on sensitive routes like /redoc and /spec. Using OAuth2 Proxy keeps the solution flexible, since you can switch identity providers with only a few configuration changes.

This closes the gap between a simple static site generator and the requirements of internal API documentation.